Improving Recruiting Efficiency with a Hybrid Bulk Data Processing Framework

LinkedIn Engineering

JANUARY 19, 2024

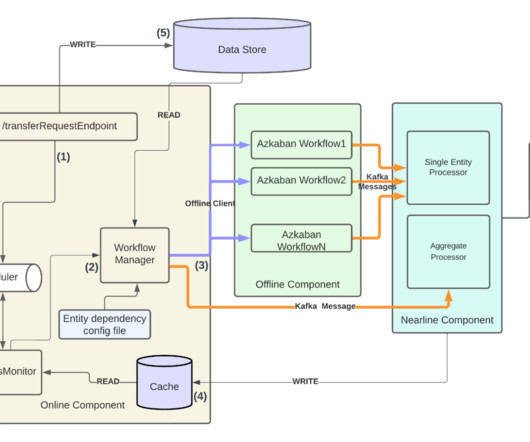

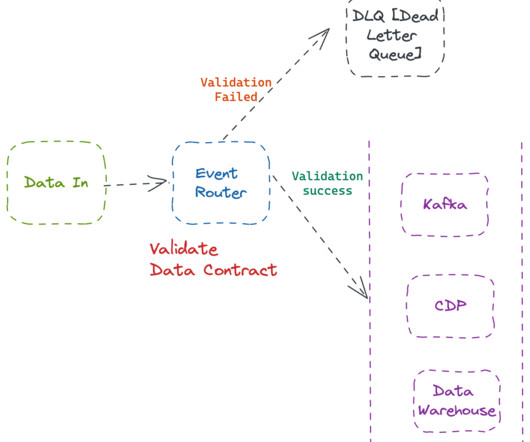

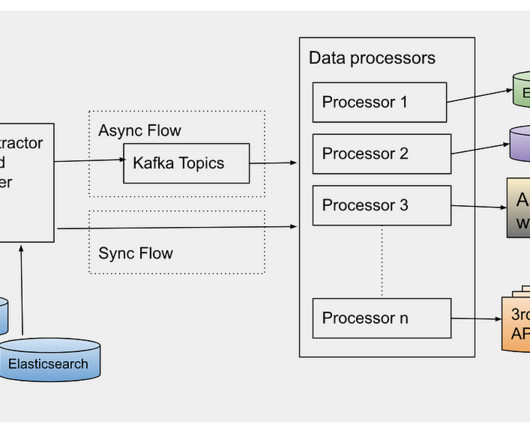

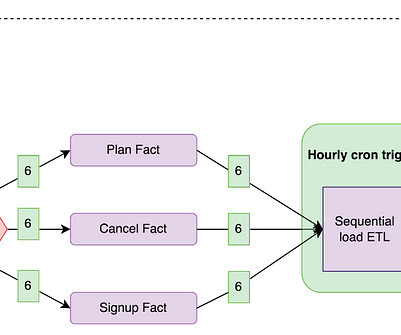

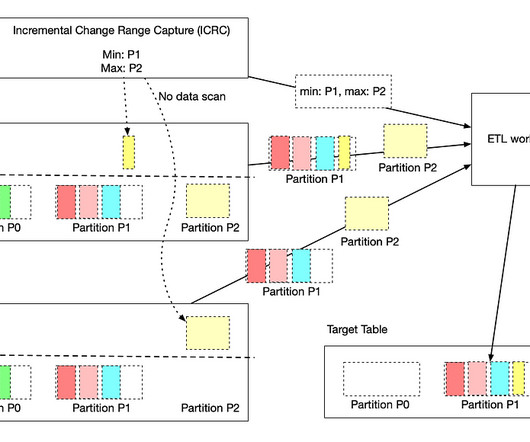

Data consistency, feature reliability, processing scalability, and end-to-end observability are key drivers of business as usual (zero disruptions) and a cohesive customer experience. With our new data processing framework, we observed a multitude of benefits, including 99.9%
