Practical Magic: Improving Productivity and Happiness for Software Development Teams

LinkedIn Engineering

DECEMBER 19, 2023

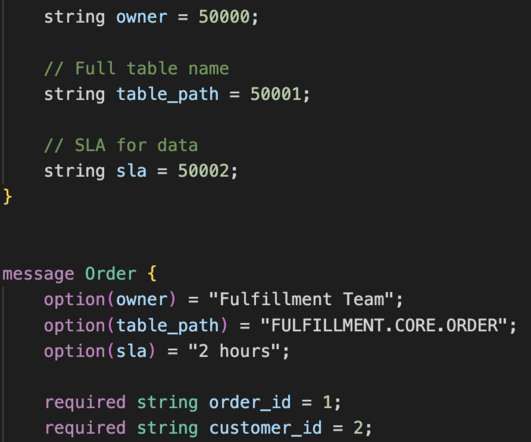

We discuss the difference between “data” and “insights,” when you want to use qualitative (objective) data vs. qualitative (subjective) data , how to drive decisions (and provide the right data for your audience), and what data you should collect (including some thoughts about data schemas for engineering data).

Let's personalize your content