A Comprehensive Guide Of Snowflake Interview Questions

Analytics Vidhya

FEBRUARY 1, 2023

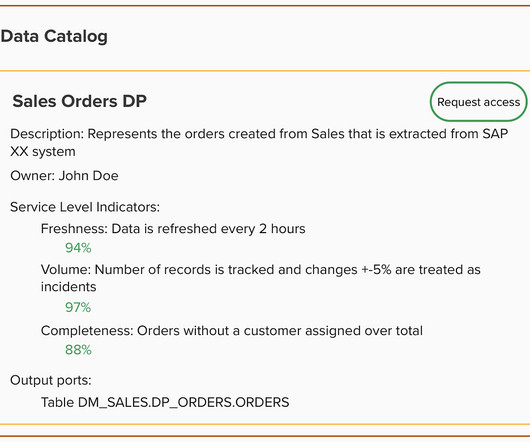

Introduction: Nowadays, organizations are looking for multiple solutions to deal with big data and related challenges. If you’re preparing for a Snowflake interview, […] The post A Comprehensive Guide Of Snowflake Interview Questions appeared first on Analytics Vidhya.
