How to get datasets for Machine Learning?

Knowledge Hut

APRIL 26, 2024

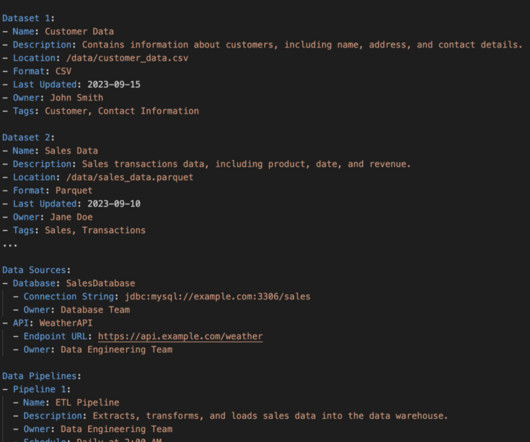

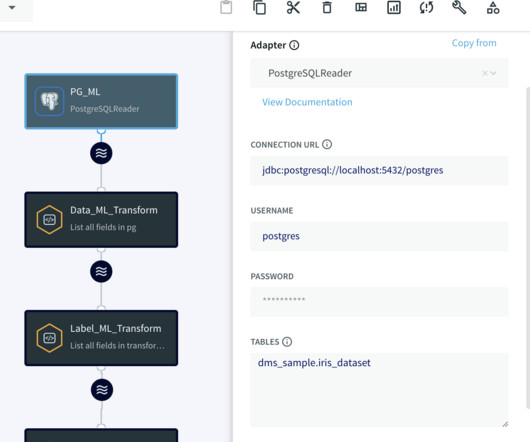

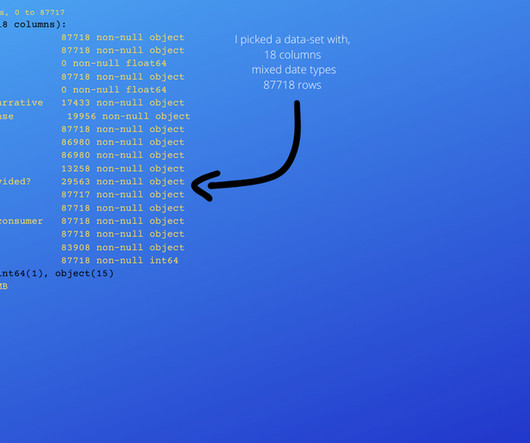

A dataset is a collection of information needed to solve a particular type of problem. Also called data storage areas, datasets help users understand the key insights in the information they represent. They play a crucial role in Machine Learning and are at the heart of every model.
