A Definitive Guide to Using BigQuery Efficiently

Towards Data Science

MARCH 5, 2024

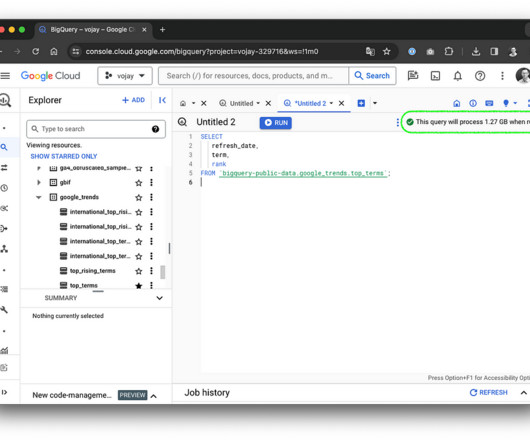

With on-demand pricing, you generally have access to up to 2,000 concurrent slots, shared among all queries in a single project, which is more than enough in most cases. Choosing the right model depends on your data access patterns and compression capabilities. As an example of the cost arithmetic, a data volume converts as GB / 1024 = 0.0056 TB, and 0.0056 TB × $8.13 per TB ≈ $0.05 in europe-west3.
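The cost arithmetic in the paragraph above can be sketched in a few lines of Python. This is a minimal illustration, not an official pricing calculator: the function name `on_demand_cost_usd` is hypothetical, the $8.13 per TB rate is the europe-west3 figure quoted in the text (assumed here to be the on-demand rate), and the GB-to-TB conversion divides by 1024 as the text does.

```python
def on_demand_cost_usd(gb_scanned: float, price_per_tb: float = 8.13) -> float:
    """Estimate the cost in USD for a given data volume in GB.

    Assumptions: price_per_tb defaults to the $8.13 europe-west3 rate
    quoted in the text; 1 TB is treated as 1024 GB, as in the text.
    """
    tb_scanned = gb_scanned / 1024  # convert GB to TB
    return tb_scanned * price_per_tb


# A volume equal to 0.0056 TB, expressed in GB.
example_gb = 0.0056 * 1024
print(f"${on_demand_cost_usd(example_gb):.2f}")
```

Rates differ by region and change over time, so passing `price_per_tb` explicitly is safer than relying on the default.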
