Open Sourcing the Netflix Domain Graph Service Framework: GraphQL for Spring Boot

Netflix Tech

FEBRUARY 3, 2021

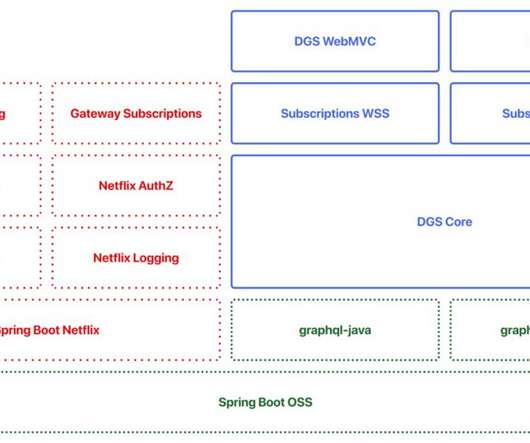

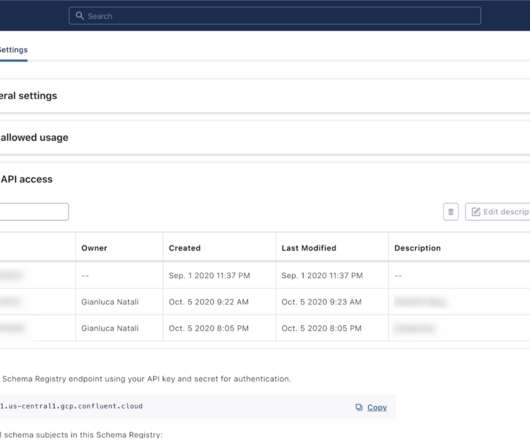

By Paul Bakker and Kavitha Srinivasan, Images by David Simmer, Edited by Greg Burrell

Netflix has developed a Domain Graph Service (DGS) framework, and it is now open source. The DGS framework simplifies the implementation of GraphQL, both for standalone and federated GraphQL services. Our framework is battle-hardened by our use at scale. By open-sourcing the project, we hope to contribute to the Java and GraphQL communities and learn from and collaborate with everyone who will be using the fra
