Monte Carlo Announces Delta Lake, Unity Catalog Integrations To Bring End-to-End Data Observability to Databricks

Monte Carlo

JUNE 28, 2022

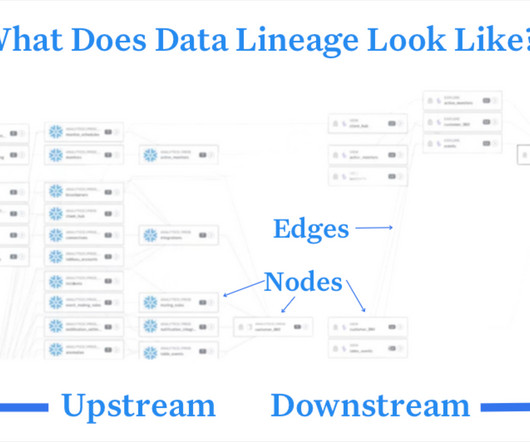

Traditionally, data lakes held raw data in its native format and were known for their flexibility, speed, and open source ecosystem. By design, the data was less structured, with limited metadata and no ACID guarantees.

Unity Catalog

The Unity Catalog unifies metastores, catalogs, and metadata within Databricks.
