Comparing Performance of Big Data File Formats: A Practical Guide

Towards Data Science

JANUARY 17, 2024

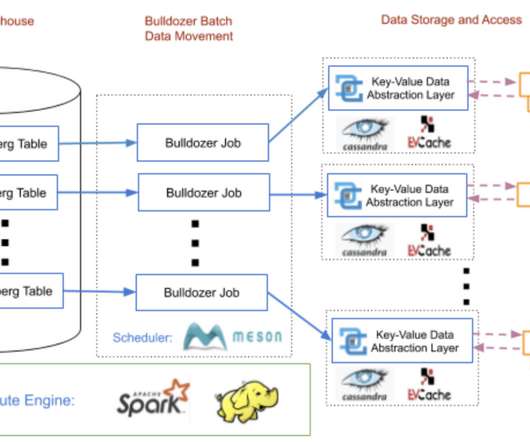

Big data file formats are key in nearly all data pipelines: they allow efficient data storage and make querying and information extraction easier. They are designed to handle the challenges of big data, such as size, speed, and structure. Data engineers often face a plethora of choices among them.
