Cloud authentication and data processing jobs

Waitingforcode

FEBRUARY 3, 2023

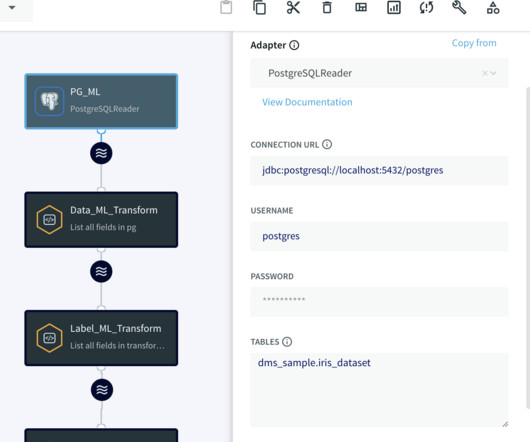

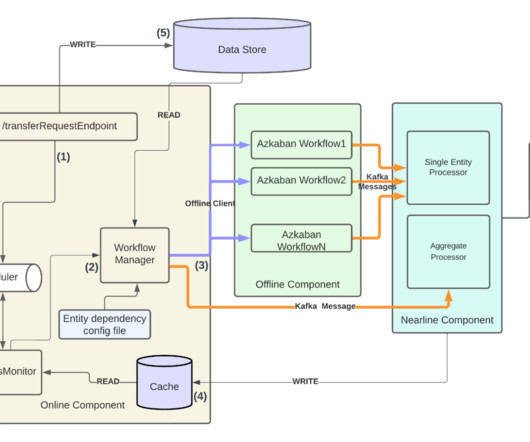

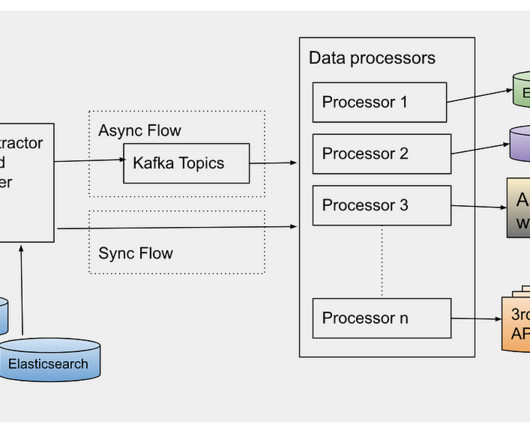

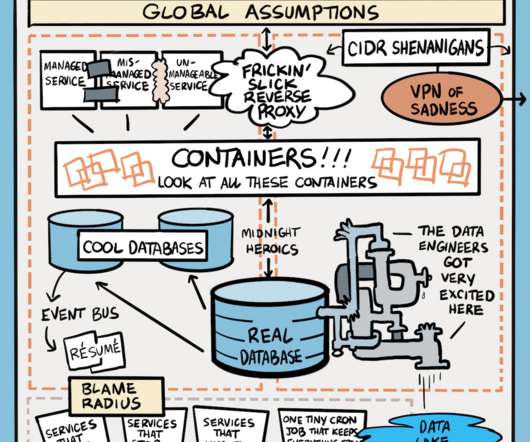

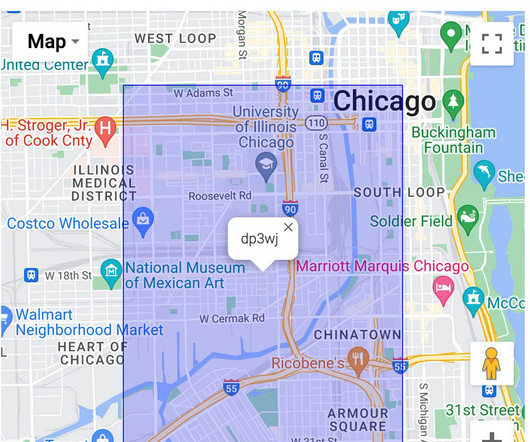

Setting up a data processing layer involves several phases. You need to write the job, define the infrastructure and the CI/CD pipeline, integrate the job with the data orchestration layer, and finally, ensure the job can access the relevant datasets. Let's see how!
