Data Ingestion: 7 Challenges and 4 Best Practices

Monte Carlo

MARCH 14, 2023

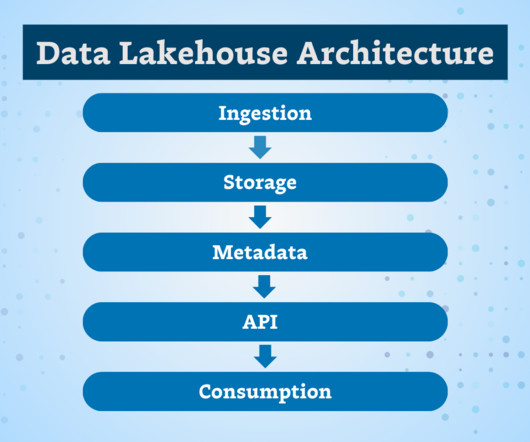

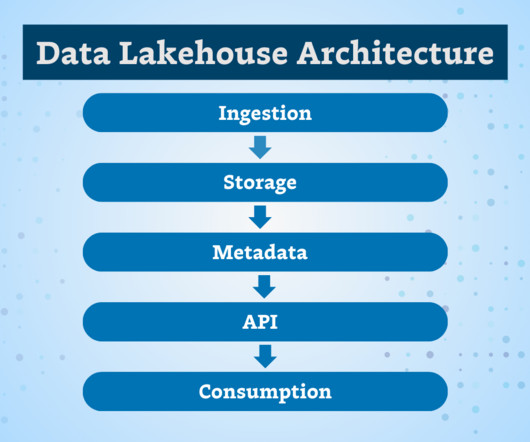

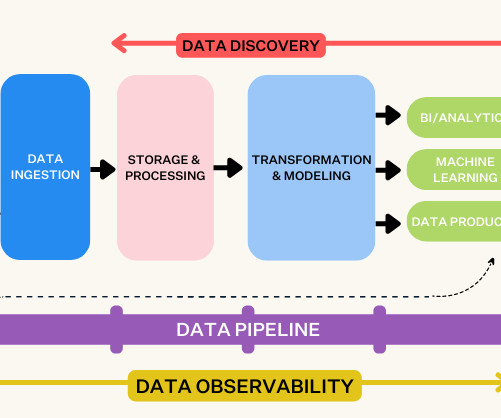

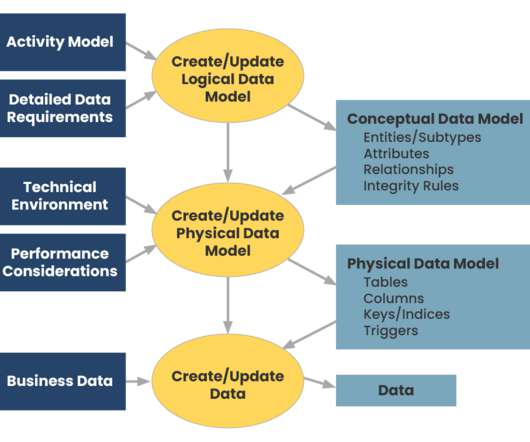

Data ingestion is the process of collecting data from various sources and moving it to your data warehouse or lake for processing and analysis. It is the first step in modern data management workflows; without it, decision making would be slower and less accurate.
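
As a rough illustration of that definition, the sketch below collects records from two sources in different formats, normalizes them to a common shape, and moves them into a single destination table. The source data, the `orders` table, and the function names are hypothetical. An in-memory SQLite database stands in for a real data warehouse or lake. Production ingestion tools also handle scheduling, incremental loads, schema changes, and failures, none of which this sketch attempts.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sources in different formats: a CSV export and a JSON API payload.
CSV_SOURCE = "order_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"order_id": 3, "amount": 42.5}]'


def extract_csv(text):
    """Parse CSV text into a list of normalized dicts."""
    return [
        {"order_id": int(row["order_id"]), "amount": float(row["amount"])}
        for row in csv.DictReader(io.StringIO(text))
    ]


def extract_json(text):
    """Parse a JSON array into the same normalized shape as the CSV records."""
    return [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"])}
        for r in json.loads(text)
    ]


def load(conn, records):
    """Append records to a destination table (SQLite stands in for a warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (order_id, amount) VALUES (:order_id, :amount)", records
    )
    conn.commit()


def ingest(conn):
    """Collect from every source, then move the combined batch to the destination."""
    records = extract_csv(CSV_SOURCE) + extract_json(JSON_SOURCE)
    load(conn, records)
    return len(records)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(ingest(conn))  # 3
```

Normalizing every source to the same record shape before loading is what lets one destination table serve downstream analysis, regardless of where each record originated.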
