ThoughtSpot Sage: data security with large language models

ThoughtSpot

MAY 31, 2023

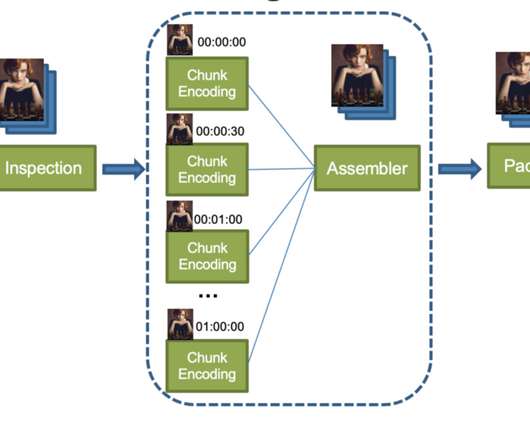

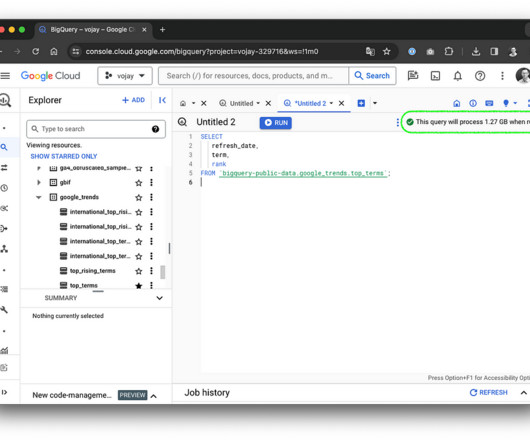

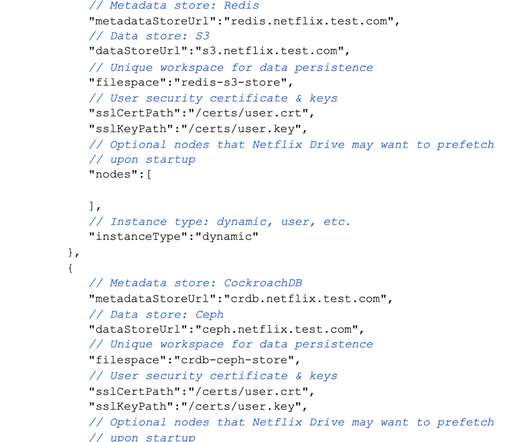

The architecture is designed to be resilient against emerging attacks on LLMs, such as prompt injection and prompt leaks.

Architecture

Let's start with the big picture and look at how we adjusted our cloud architecture, adding internal and external interfaces to integrate an LLM.
