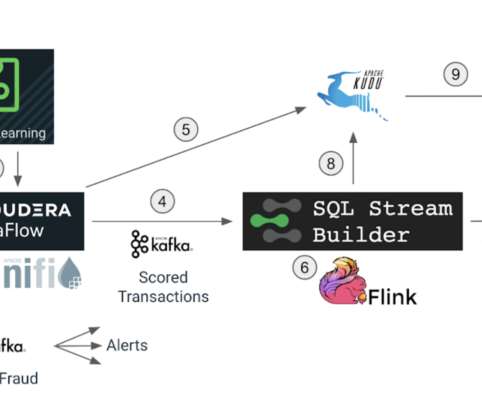

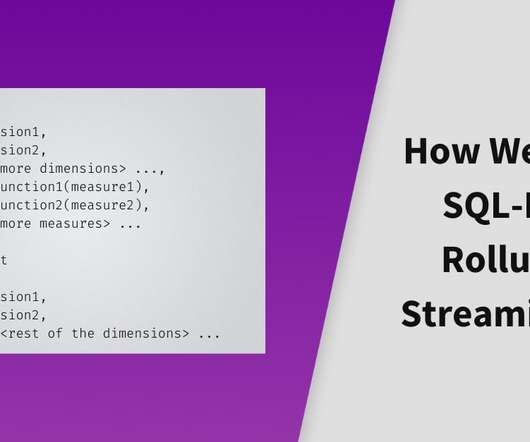

SQL Streambuilder Data Transformations

Cloudera

FEBRUARY 21, 2023

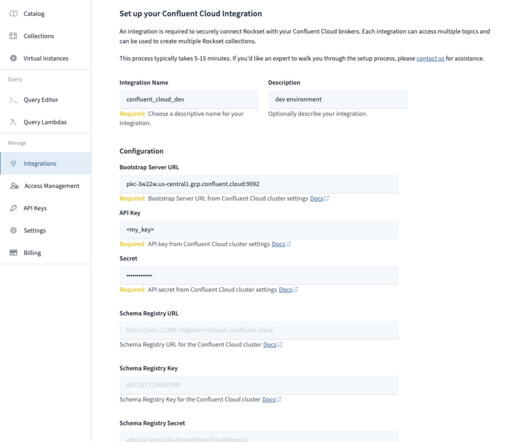

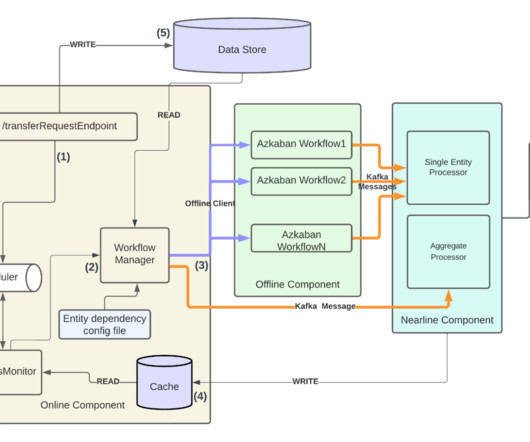

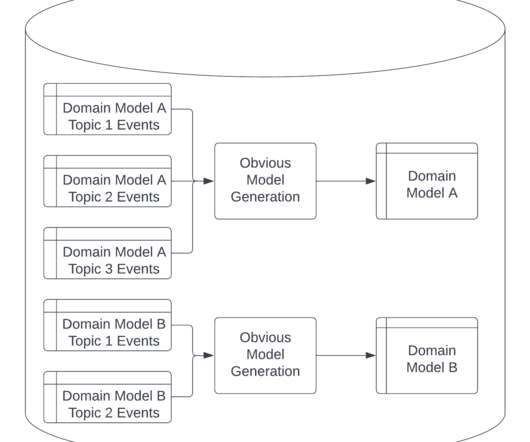

SQL Stream Builder (SSB), part of Cloudera Streaming Analytics, is a versatile platform for SQL-based data analytics built on top of Apache Flink. It enables users to easily write, run, and manage real-time continuous SQL queries on stream data with a smooth user experience.
