Securely Scaling Big Data Access Controls At Pinterest

Pinterest Engineering

JULY 25, 2023

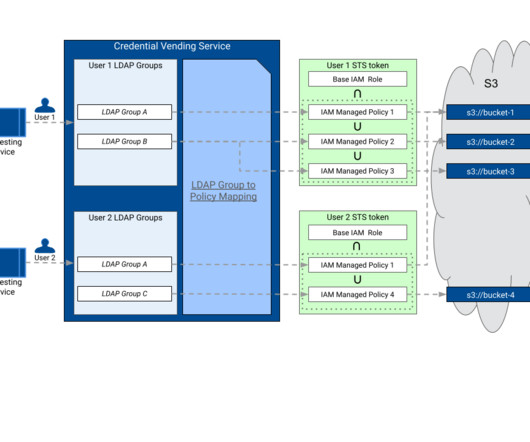

Each dataset needs to be stored securely, with minimal access granted, to ensure it is used appropriately and can easily be located and disposed of when necessary. Consequently, access control mechanisms must also scale continually to keep pace with the ever-increasing diversity of datasets.
