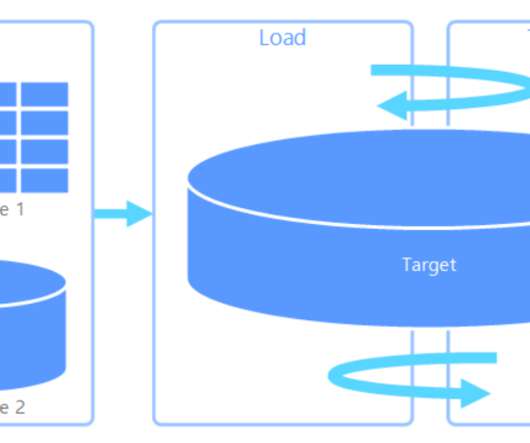

Manufacturing Data Ingestion into Snowflake

Snowflake

JANUARY 26, 2023

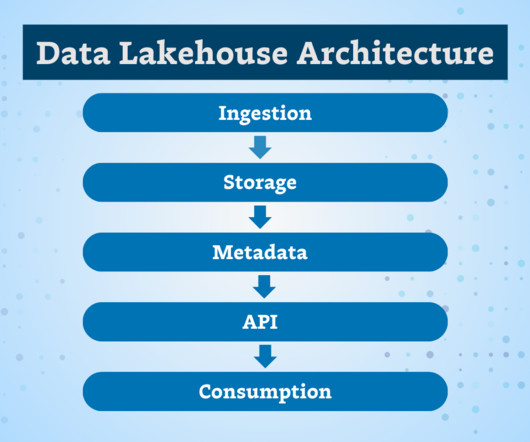

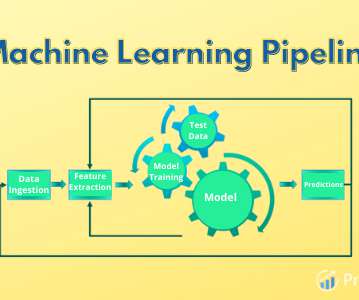

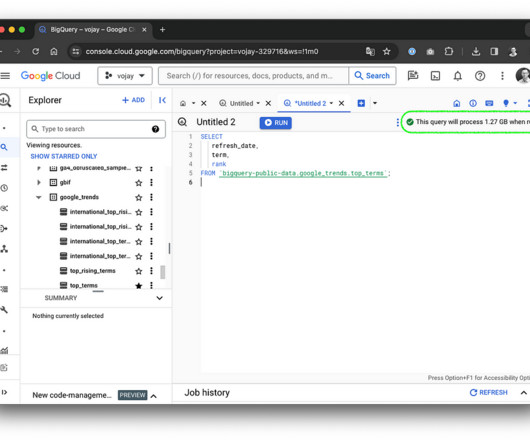

Accessing data from the manufacturing shop floor is a key topic of interest for most cloud platform vendors, driven by the pace of Industry 4.0. Developed with our partners, this architecture includes MQTT-based data ingestion into Snowflake. Stay tuned for more insights on Industry 4.0.
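To make MQTT-based ingestion a little more concrete, here is a minimal sketch of one step such a pipeline might include: turning a single MQTT message (topic plus JSON payload) into a flat row that can be batched for loading. The post does not describe the actual architecture, so everything here is an assumption for illustration: the topic layout `<site>/<line>/<device>/<metric>`, the payload fields `value` and `ts`, and the names `SensorRow`, `to_row`, and `to_ndjson` are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class SensorRow:
    # Hypothetical target columns; the source does not describe a schema.
    site: str
    line: str
    device: str
    metric: str
    value: float
    ts: str


def to_row(topic: str, payload: bytes) -> SensorRow:
    """Map one MQTT message (topic + JSON payload) to a flat row."""
    # Assumed topic layout: <site>/<line>/<device>/<metric>
    parts = topic.split("/")
    if len(parts) != 4:
        raise ValueError(f"unexpected topic layout: {topic!r}")
    site, line, device, metric = parts
    # Assumed payload shape: {"value": <number>, "ts": <timestamp string>}
    body = json.loads(payload)
    return SensorRow(site, line, device, metric, float(body["value"]), str(body["ts"]))


def to_ndjson(rows: list[SensorRow]) -> str:
    """Serialize a batch as newline-delimited JSON, one object per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in rows)


if __name__ == "__main__":
    row = to_row(
        "plant1/line3/press7/temperature",
        b'{"value": 71.5, "ts": "2023-01-26T08:00:00Z"}',
    )
    print(to_ndjson([row]))
```

In a real deployment the messages would arrive through an MQTT client subscription rather than a direct function call, and the batched output would be handed to whatever loading mechanism the architecture uses. Newline-delimited JSON is chosen here only because it is a common interchange format for bulk loading, not because the post specifies it.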
