Data ingestion pipeline with Operation Management

Netflix Tech

MARCH 7, 2023

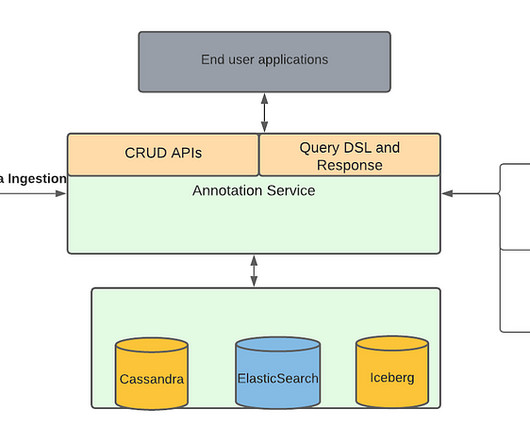

These media-focused machine learning algorithms, as well as other teams, generate a lot of data from media files. This data is stored as annotations in Marken, which we described in our previous blog; we refer the reader to that article for details. … in a video file. This new operation is marked as being in the STARTED state.
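The minimal Python sketch below shows an operation lifecycle using a simple in-memory model. Only the STARTED state comes from the text. The `FINISHED` state, the `Operation` and `Annotation` records, and the `start_operation`, `ingest`, and `finish_operation` helpers are hypothetical names chosen for illustration. They are not Marken's actual API.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class OperationState(Enum):
    STARTED = "STARTED"    # state named in the article
    FINISHED = "FINISHED"  # hypothetical terminal state, assumed for illustration


@dataclass
class Annotation:
    """Hypothetical annotation produced by an algorithm for one media file."""
    media_id: str
    label: str
    start_ms: int
    end_ms: int


@dataclass
class Operation:
    """Hypothetical record grouping the annotations written by one ingestion run."""
    operation_id: str
    media_id: str
    state: OperationState = OperationState.STARTED
    annotations: List[Annotation] = field(default_factory=list)


def start_operation(media_id: str) -> Operation:
    # A new operation begins in the STARTED state (the dataclass default).
    return Operation(operation_id=str(uuid.uuid4()), media_id=media_id)


def ingest(op: Operation, annotation: Annotation) -> None:
    # Accept annotations only while the operation is still STARTED.
    if op.state is not OperationState.STARTED:
        raise ValueError(f"operation {op.operation_id} is {op.state.value}")
    # Every annotation in an operation must refer to the same media file.
    if annotation.media_id != op.media_id:
        raise ValueError("annotation belongs to a different media file")
    op.annotations.append(annotation)


def finish_operation(op: Operation) -> None:
    # Hypothetical transition out of STARTED once ingestion completes.
    op.state = OperationState.FINISHED
```

A typical run calls `start_operation("movie-123")`, then `ingest(...)` once per annotation, and finally `finish_operation(op)`. Grouping annotations under one operation ID lets a pipeline track a batch of writes as a single unit. Rejecting writes after an operation leaves STARTED is one plausible policy, not necessarily the one Marken applies.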
