1.5 Years of Spark Knowledge in 8 Tips

Towards Data Science

December 24, 2023

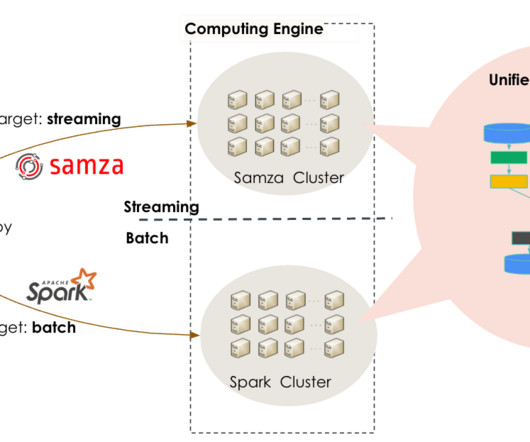

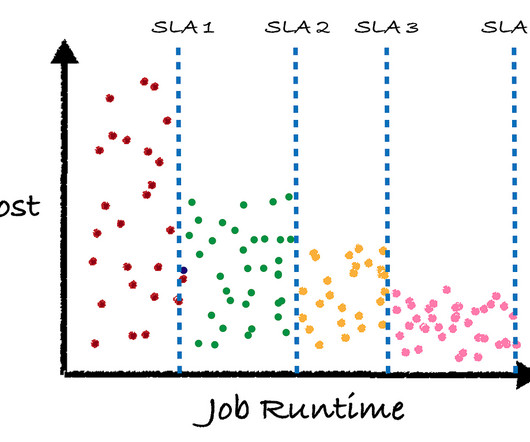

My learnings from Databricks customer engagements

Figure 1: a technical diagram of how to write Apache Spark.

After working with ~15 of the largest retail organizations for the past 18 months, here are the Spark tips I commonly repeat.

0 — Quick Review

Quickly, let’s review what Spark does… Spark is a big data processing engine.
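To picture what a distributed processing engine like Spark does at its core, here is a toy, single-machine sketch in plain Python. It is not Spark's API. It only mimics the general pattern: split the data into partitions, process each partition independently, then combine the partial results. The functions `partition`, `count_words`, and `word_count` are hypothetical names for illustration, and a thread pool stands in for the executors Spark would run across a cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(records, num_partitions):
    """Hypothetical helper: split records round-robin into num_partitions lists.

    This is a toy stand-in for how Spark divides a dataset into partitions.
    """
    parts = [[] for _ in range(num_partitions)]
    for i, rec in enumerate(records):
        parts[i % num_partitions].append(rec)
    return parts


def count_words(part):
    """Per-partition work: count words locally within one partition."""
    counts = Counter()
    for line in part:
        counts.update(line.split())
    return counts


def word_count(lines, num_partitions=4):
    """Toy word count: partition, process partitions concurrently, then combine."""
    parts = partition(lines, num_partitions)
    # Threads here; Spark would schedule tasks on executors across many machines.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(count_words, parts))
    # Merge the per-partition counts, analogous to a reduce step after a shuffle.
    return reduce(lambda a, b: a + b, partials, Counter())


if __name__ == "__main__":
    lines = ["spark is fast", "spark is distributed", "data is big"]
    print(word_count(lines, num_partitions=2))
```

The point of the design is that each partition can be handled without looking at the others, which is what lets an engine like Spark scale the same logic from one machine to many.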