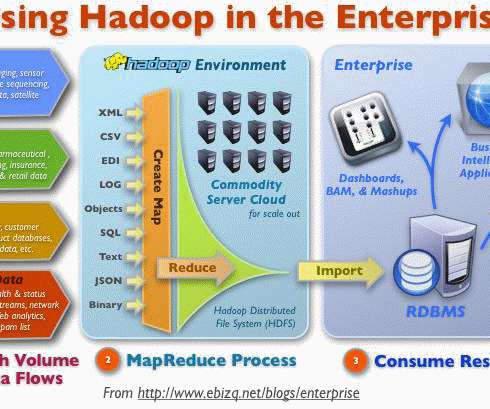

Sqoop vs. Flume Battle of the Hadoop ETL tools

ProjectPro

OCTOBER 28, 2015

Apache Hadoop is synonymous with big data for its cost-effectiveness and its attribute of scalability for processing petabytes of data. Data analysis using hadoop is just half the battle won. Getting data into the Hadoop cluster plays a critical role in any big data deployment.

Let's personalize your content