Complete Guide to Data Ingestion: Types, Process, and Best Practices

Databand.ai

JULY 19, 2023

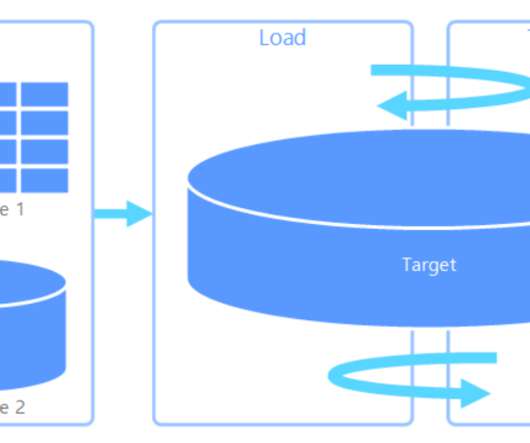

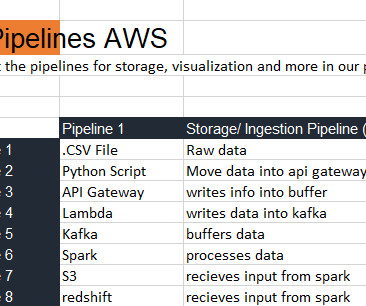

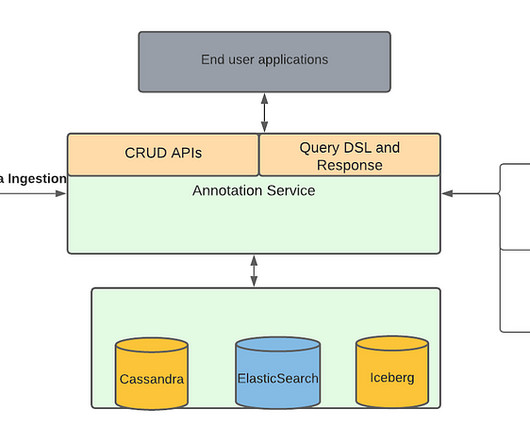

Complete Guide to Data Ingestion: Types, Process, and Best Practices Helen Soloveichik July 19, 2023 What Is Data Ingestion? Data Ingestion is the process of obtaining, importing, and processing data for later use or storage in a database. In this article: Why Is Data Ingestion Important?

Let's personalize your content