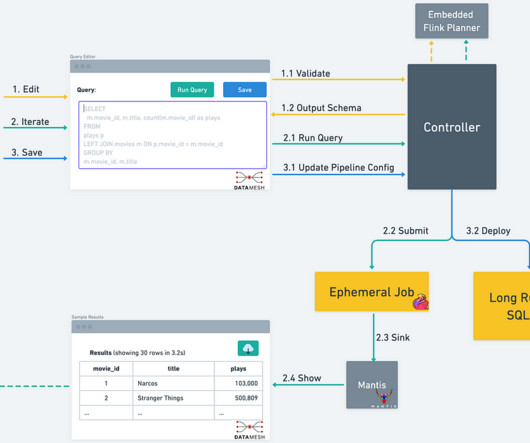

Projects in SQL Stream Builder

Cloudera

MAY 1, 2023

Businesses everywhere have engaged in modernization projects with the goal of making their data and application infrastructure more nimble and dynamic.

Tracking changes in a project

Like any software project, SQL Stream Builder (SSB) projects are constantly evolving as users create or modify resources, run queries, and create jobs.
