AWS Glue: Unleashing the Power of Serverless ETL Effortlessly

ProjectPro

FEBRUARY 8, 2023

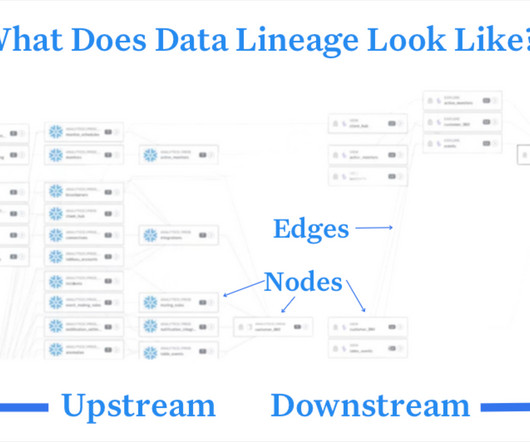

Let us dive deeper into this data integration service from AWS and understand how and why big data professionals use it in their data engineering projects. When Glue receives a trigger, it extracts the data from its source, transforms it using code that Glue generates automatically, and then loads it into a target such as Amazon S3 or Amazon Redshift.
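To make the trigger, extract, transform, load sequence concrete, here is a minimal plain-Python sketch of the same flow. It is not Glue's API and not the code Glue generates: the names `SOURCE_CSV`, `extract`, `transform`, `load`, and `on_trigger` are hypothetical, a CSV string stands in for the data source, and a dictionary stands in for a target such as Amazon S3 or Amazon Redshift.

```python
import csv
import io

# Hypothetical stand-in for a data source (in Glue this would be a real data store).
SOURCE_CSV = "order_id,amount\n1,19.99\n2,5.00\n"


def extract(raw_csv):
    """Collect the source data as a list of row dictionaries (all values are strings)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Cast string fields to proper types; this mimics the transformation step."""
    return [
        {"order_id": int(row["order_id"]), "amount": float(row["amount"])}
        for row in rows
    ]


def load(rows, target, key):
    """Write the transformed rows into the target (a dict standing in for S3/Redshift)."""
    target[key] = rows


def on_trigger(raw_csv, target, key="orders"):
    """Run extract, transform, and load in order, as if a trigger had fired."""
    rows = transform(extract(raw_csv))
    load(rows, target, key)
    return len(rows)  # number of rows loaded


if __name__ == "__main__":
    warehouse = {}
    loaded = on_trigger(SOURCE_CSV, warehouse)
    print(loaded, warehouse["orders"])
```

Keeping the three stages as separate functions mirrors the way the article describes the pipeline: the trigger only starts the run, and each stage can be swapped out without touching the others.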
