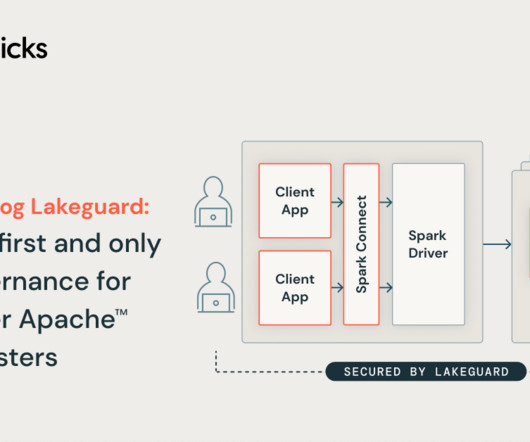

Unity Catalog Lakeguard: Industry-first and only data governance for multi-user Apache Spark™ clusters

databricks

APRIL 24, 2024

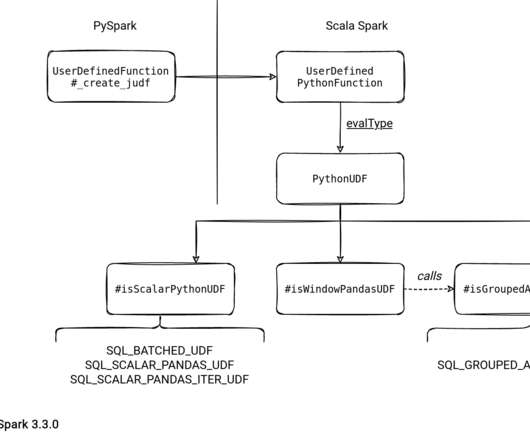

Unlock the power of Apache Spark™ with Unity Catalog Lakeguard on the Databricks Data Intelligence Platform. Run SQL, Python, and Scala workloads with full data governance and cost-efficient multi-user compute.
