What are Data Insights? Definition, Differences, Examples

Knowledge Hut

JANUARY 18, 2024

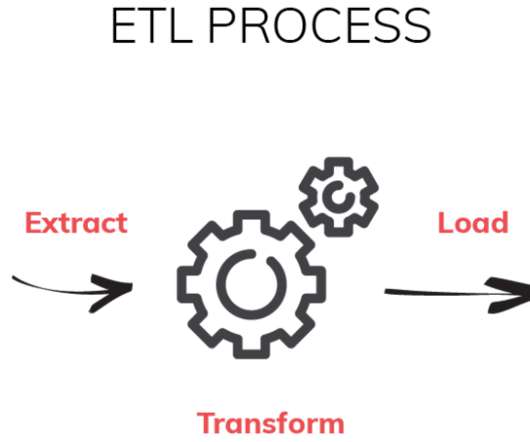

We live in a digital world where we have access to a large volume of information. However, while anyone can access raw data, it is your ability to extract relevant and reliable information from the numbers that determines whether your company can gain a competitive edge.
