Fundamentals of Apache Spark

Knowledge Hut

MAY 3, 2024

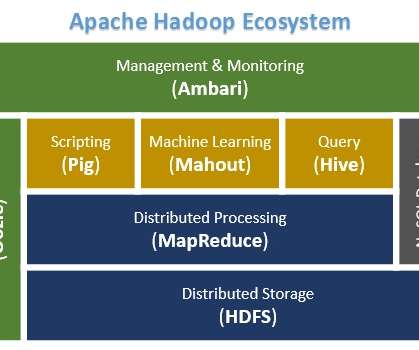

Before getting into big data, you should have at least a basic knowledge of the following. First, one programming language: core Python or Scala. Second, HDFS and YARN: Spark can be installed on any platform, but its framework is similar to Hadoop, so familiarity with both is highly recommended. Third, basic SQL.
