Threads: The inside story of Meta’s newest social app

Engineering at Meta

SEPTEMBER 7, 2023

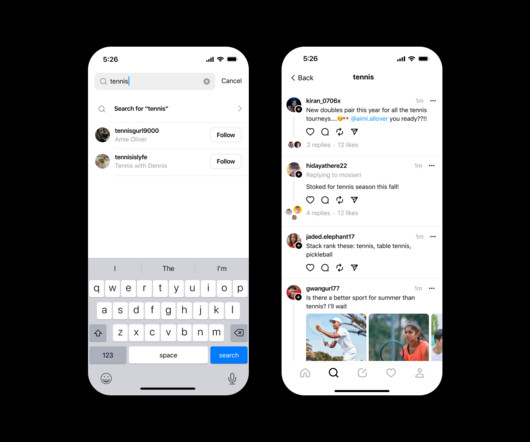

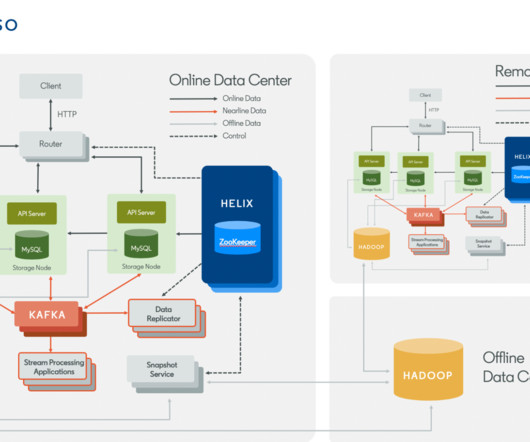

Earlier this year, a small team of engineers at Meta started working on an idea for a new app. It would have all the features people expect from a text-based conversations app, but with one distinctive goal: letting people share their content across multiple platforms. We wanted to build a decentralized (or federated) app that would enable people to post content that anyone on other social apps can view, and vice versa.
