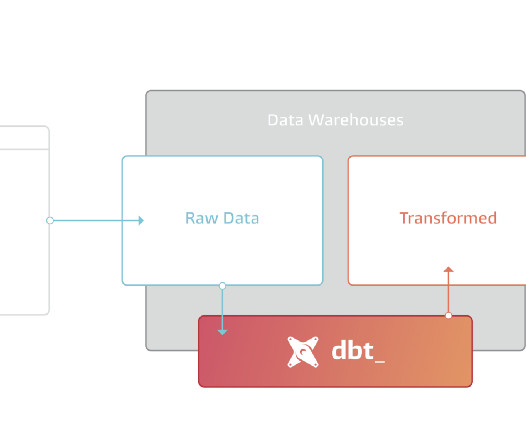

How to get started with dbt

Christophe Blefari

MARCH 1, 2023

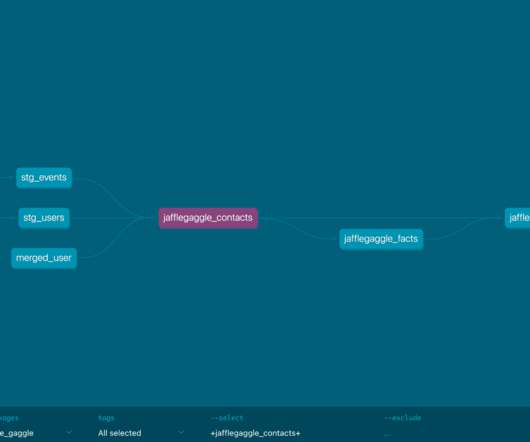

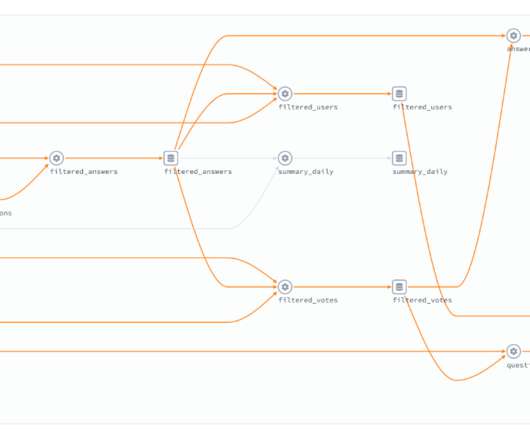

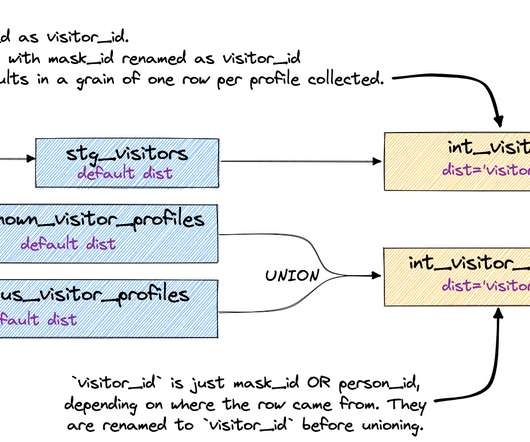

A model: a select statement that can be materialised as a table or as a view. Models are the most important dbt objects because they are your data assets: all your business logic lives in their select statements. You can also attach metadata to models in YAML. Models can reference each other with the `ref()` function, and the resulting graph of dependencies between models is what we call a DAG (directed acyclic graph).
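In dbt, a model selects from another model through the Jinja call `{{ ref('model_name') }}`, and dbt infers the dependency graph from those calls. The toy Python sketch below imitates that idea so the "DAG" part is concrete. It finds the single-argument `ref()` calls in each model's SQL and orders the models so that every parent comes before its children. The model names and SQL are hypothetical, and this is not dbt's implementation. dbt renders the Jinja itself and handles more cases, such as the two-argument `ref('package', 'model')` form.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models: each one is just a SELECT statement, as in dbt.
# Names and SQL are illustrative assumptions, not taken from a real project.
MODELS = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_orders": """
        select c.customer_id, count(o.order_id) as order_count
        from {{ ref('stg_customers') }} as c
        left join {{ ref('stg_orders') }} as o using (customer_id)
        group by 1
    """,
}

# Matches single-argument Jinja calls such as {{ ref('stg_orders') }}.
REF_PATTERN = re.compile(r"""\{\{\s*ref\(\s*['"]([\w.]+)['"]\s*\)\s*\}\}""")


def build_dag(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model to the set of models it references with ref()."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}


def run_order(models: dict[str, str]) -> list[str]:
    """Return an order in which every model comes after the models it refs.

    TopologicalSorter expects {node: predecessors}, which is exactly what
    build_dag returns. A cycle raises graphlib.CycleError.
    """
    return list(TopologicalSorter(build_dag(models)).static_order())


if __name__ == "__main__":
    print(build_dag(MODELS))
    print(run_order(MODELS))
```

Running it lists `customer_orders` after both staging models. If two models referenced each other, `static_order()` would raise `graphlib.CycleError`. This is the "acyclic" in DAG: a model can never depend, directly or indirectly, on itself.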
