Apache Ozone Metadata Explained

Cloudera

JUNE 2, 2021

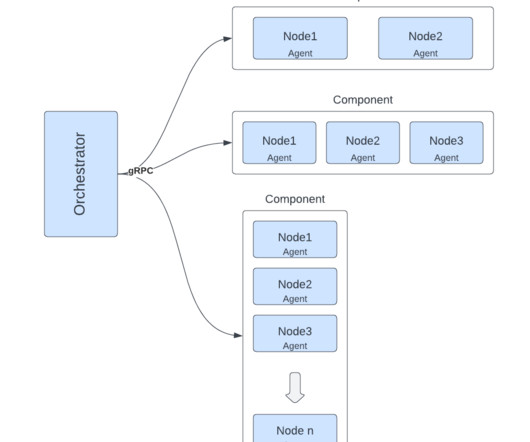

Apache Ozone is a distributed object store built on top of the Hadoop Distributed Data Store (HDDS) service. To scale better, Ozone splits metadata management across separate services. The Ozone Manager (OM) service manages the namespace metadata: volumes, buckets, and keys.
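To make the volume, bucket, and key hierarchy concrete, here is a minimal in-memory sketch in Python. It is a toy model only, not Ozone's implementation or API: the class and method names (`ToyNamespace`, `create_volume`, `create_bucket`, `put_key`, `list_keys`) are hypothetical, and the only metadata tracked per key is an assumed size in bytes.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Bucket:
    """A bucket holds keys; here each key maps to an object size in bytes."""
    name: str
    keys: Dict[str, int] = field(default_factory=dict)


@dataclass
class Volume:
    """A volume is the top-level container and holds buckets."""
    name: str
    buckets: Dict[str, Bucket] = field(default_factory=dict)


class ToyNamespace:
    """Hypothetical stand-in for the namespace metadata a service like OM tracks."""

    def __init__(self) -> None:
        self.volumes: Dict[str, Volume] = {}

    def create_volume(self, volume: str) -> None:
        # Create the volume if it does not exist yet.
        self.volumes.setdefault(volume, Volume(volume))

    def create_bucket(self, volume: str, bucket: str) -> None:
        # Raises KeyError if the volume has not been created.
        self.volumes[volume].buckets.setdefault(bucket, Bucket(bucket))

    def put_key(self, volume: str, bucket: str, key: str, size: int) -> None:
        # Record (or overwrite) the metadata entry for one key.
        self.volumes[volume].buckets[bucket].keys[key] = size

    def list_keys(self, volume: str, bucket: str) -> List[str]:
        # Return the key names in a bucket in sorted order.
        return sorted(self.volumes[volume].buckets[bucket].keys)


ns = ToyNamespace()
ns.create_volume("vol1")
ns.create_bucket("vol1", "bucket1")
ns.put_key("vol1", "bucket1", "logs/app.log", 2048)
print(ns.list_keys("vol1", "bucket1"))
```

The sketch only tracks names and sizes; it says nothing about where the object data itself is stored, which is the kind of concern Ozone leaves to services other than the one managing the namespace.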
