Best Practices For Loading and Querying Large Datasets in GCP BigQuery

Analytics Vidhya

FEBRUARY 15, 2023

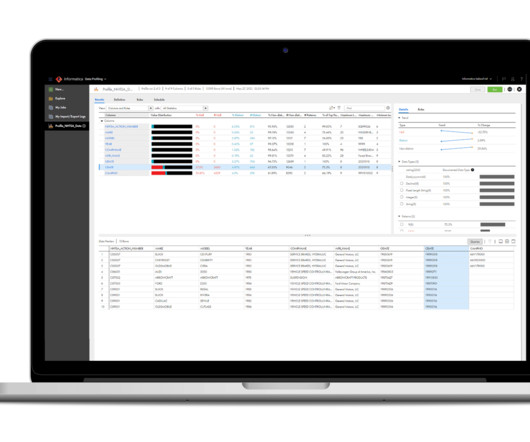

Source: dataedo.com

BigQuery is designed to handle big data and is ideal for […] Its importance lies in its ability to handle big data and provide insights that can inform business decisions.

The post Best Practices For Loading and Querying Large Datasets in GCP BigQuery appeared first on Analytics Vidhya.
