How to Design a Modern, Robust Data Ingestion Architecture

Monte Carlo

MAY 28, 2024

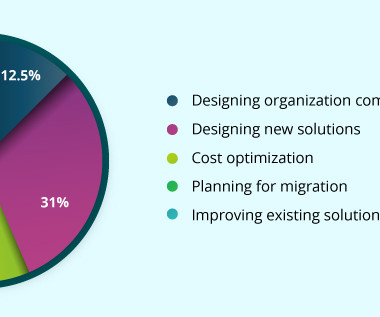

Identifying and cataloging data sources ensures that all relevant data inputs are accounted for, which is crucial for a comprehensive ingestion process. Data is then loaded in one of two ways: batch processing loads data at scheduled intervals, whereas real-time processing loads it continuously, keeping data available and up to date.
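To make the distinction concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a reference implementation. It assumes a hypothetical in-memory `SourceCatalog` for tracking known sources, records that carry an integer timestamp field `ts` (in seconds), and a batch job that runs every `interval` seconds and picks up everything that arrived since the previous run. `SourceCatalog`, `batch_windows`, `stream_load`, and the source names in the usage example are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class SourceCatalog:
    """Hypothetical registry of known data sources (name -> description)."""
    sources: dict[str, str] = field(default_factory=dict)

    def register(self, name: str, description: str) -> None:
        self.sources[name] = description

    def missing(self, expected: Iterable[str]) -> list[str]:
        """Return expected sources that have not been cataloged yet."""
        return sorted(set(expected) - set(self.sources))


def batch_windows(records: Iterable[dict], interval: int) -> dict[int, list[dict]]:
    """Group records by the scheduled batch run that would load them.

    Assumption: a run at time k * interval loads every record whose
    timestamp falls in [(k - 1) * interval, k * interval).
    """
    windows: dict[int, list[dict]] = {}
    for record in sorted(records, key=lambda r: r["ts"]):
        run_time = (record["ts"] // interval + 1) * interval
        windows.setdefault(run_time, []).append(record)
    return windows


def stream_load(events: Iterable[dict], sink: Callable[[dict], None]) -> int:
    """Load each event as soon as it arrives; return how many were loaded."""
    count = 0
    for event in events:
        sink(event)  # hand off immediately, no waiting for a schedule
        count += 1
    return count


if __name__ == "__main__":
    catalog = SourceCatalog()
    catalog.register("orders_db", "Orders table (hypothetical)")
    catalog.register("clickstream", "Web events feed (hypothetical)")
    print(catalog.missing(["orders_db", "clickstream", "crm_api"]))

    records = [{"ts": t} for t in (0, 30, 61, 119, 120)]
    for run_time, batch in batch_windows(records, interval=60).items():
        print(run_time, [r["ts"] for r in batch])

    loaded: list[dict] = []
    print(stream_load(records, loaded.append))
```

The catalog check flags `crm_api` as an expected source that nobody has registered, which is the kind of gap cataloging is meant to catch. In the batch model, the record at `ts=120` waits until the run at 180 before it is loaded, while the streaming path hands every record to the sink as soon as it arrives. That is the freshness trade-off between scheduled batches and continuous loading.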
