Modern Data Engineering

Towards Data Science

NOVEMBER 4, 2023

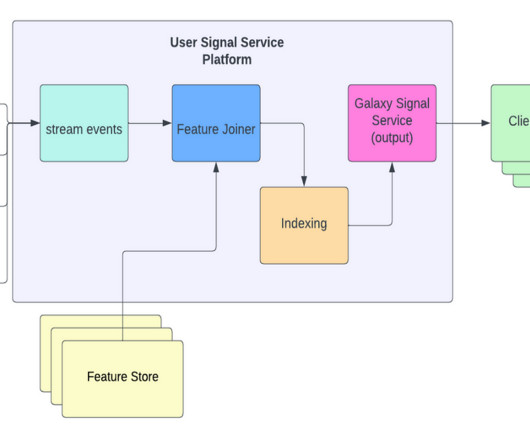

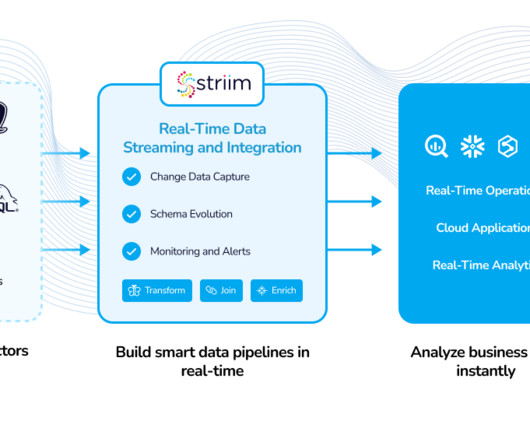

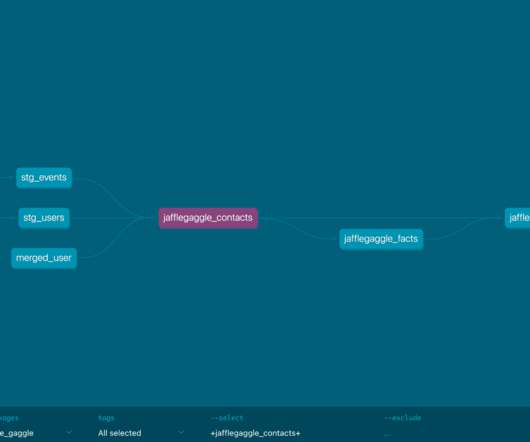

The data engineering landscape is constantly changing, but the major trends seem to remain the same.

How to Become a Data Engineer

As a data engineer, I am tasked with designing efficient data processes almost every day.

It was created by Spotify to manage massive data processing workloads.

Datalake example.
