Building cost effective data pipelines with Python & DuckDB

Start Data Engineering

MAY 28, 2024

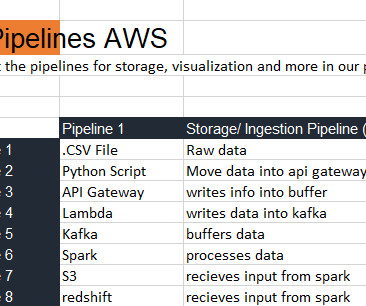

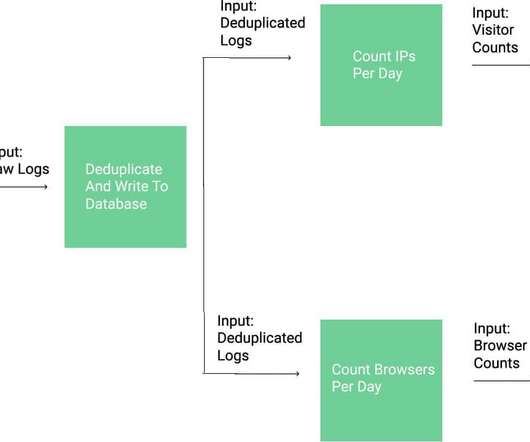

Building efficient data pipelines with DuckDB 4.1. Use DuckDB to process data, not for multiple users to access data 4.2. Cost calculation: DuckDB + Ephemeral VMs = dirt cheap data processing 4.3. Processing data less than 100GB? KISS: DuckDB + Python = easy to debug and quick to develop 4.

Let's personalize your content