Eliminate Friction In Your Data Platform Through Unified Metadata Using OpenMetadata

Data Engineering Podcast

NOVEMBER 10, 2021

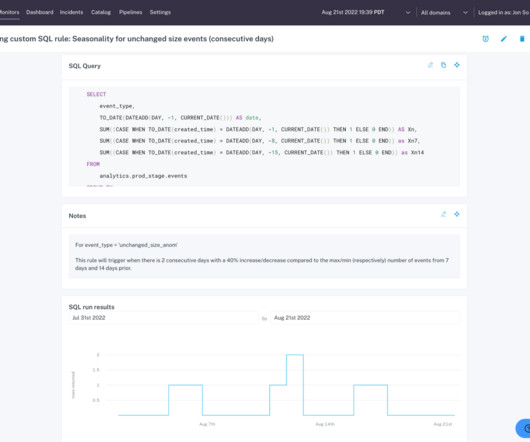

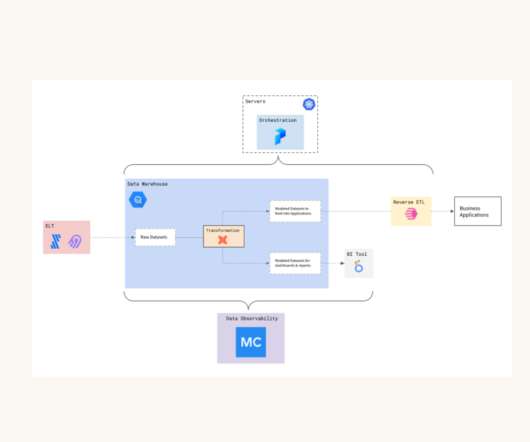

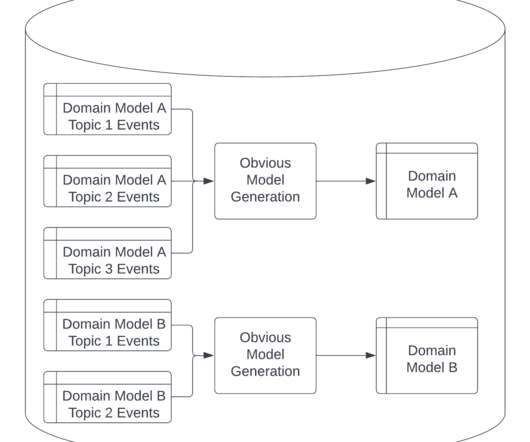

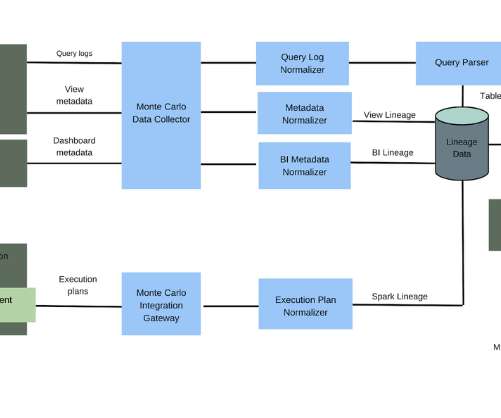

Summary: A significant source of friction and wasted effort in building and integrating data management systems is the fragmentation of metadata across various tools.
