How to install Apache Spark on Windows?

Knowledge Hut

MAY 2, 2024

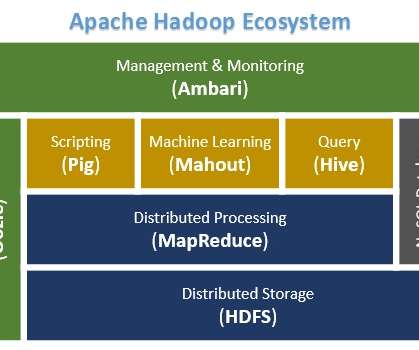

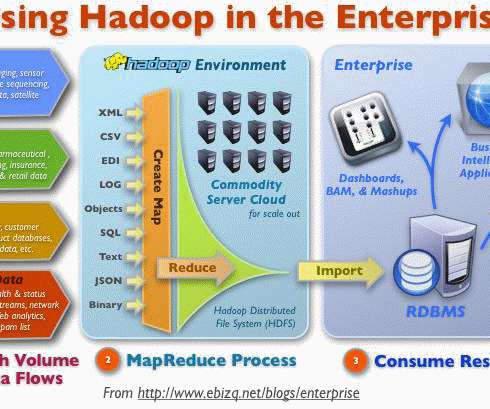

Apache Spark provides high-level APIs in Java, Scala, Python, and R, along with an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. For Hadoop 2.7,
