8 Data Ingestion Tools (Quick Reference Guide)

Monte Carlo

FEBRUARY 20, 2024

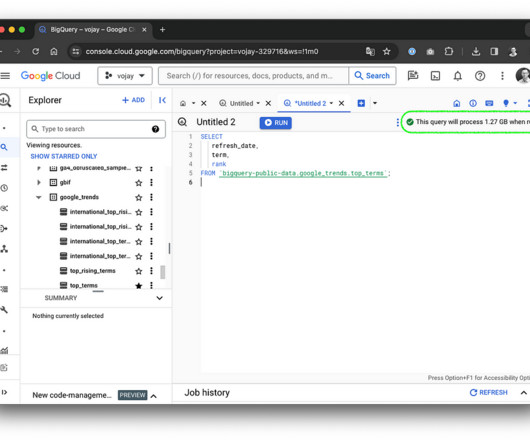

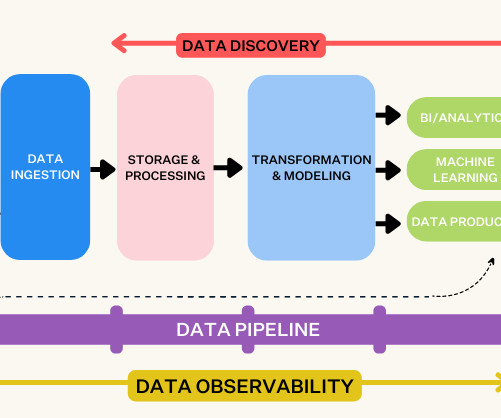

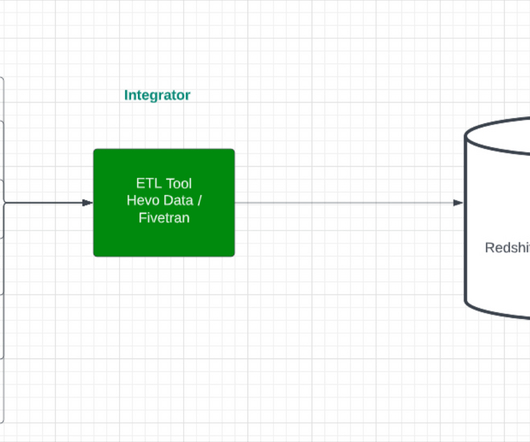

At the heart of every data-driven decision is a deceptively simple question: How do you get the right data to the right place at the right time? The growing field of data ingestion tools offers a range of answers, each with its own implications to consider.

Fivetran

Image courtesy of Fivetran.
