How to Ensure Data Integrity at Scale By Harnessing Data Pipelines

Ascend.io

APRIL 12, 2023

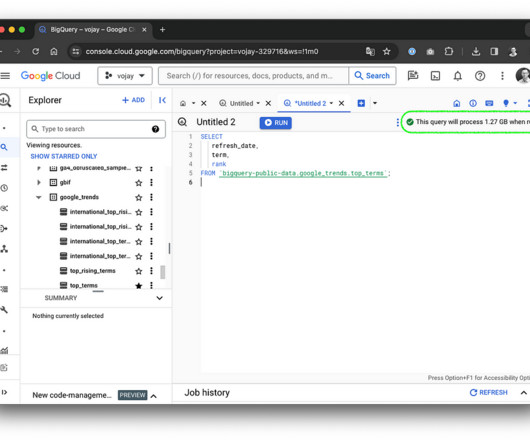

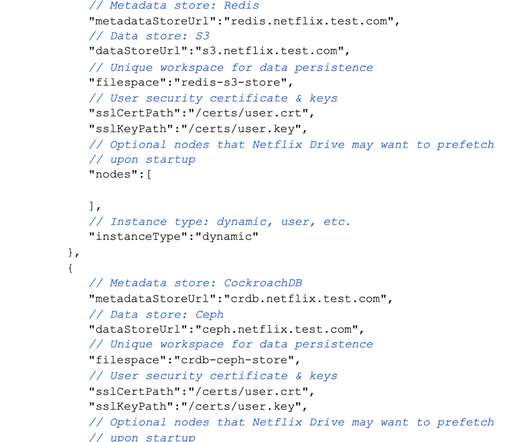

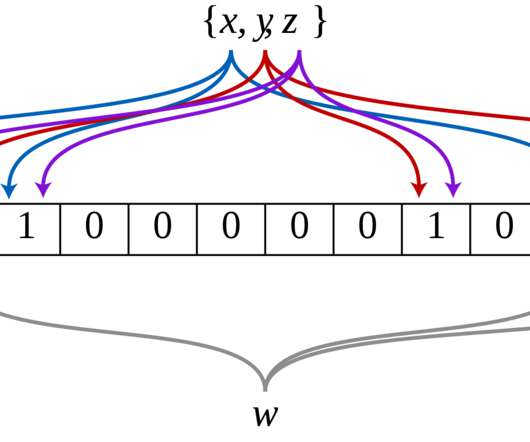

Foundational encoding, whether it is ASCII or another byte-level code, is delimited correctly into fields or columns and packaged correctly into JSON, parquet, or other file system. It should detect “schema drift,” and may involve operations that validate datasets against source system metadata, for example. In a valid schema.

Let's personalize your content