Data Vault Architecture, Data Quality Challenges, And How To Solve Them

Monte Carlo

FEBRUARY 9, 2023

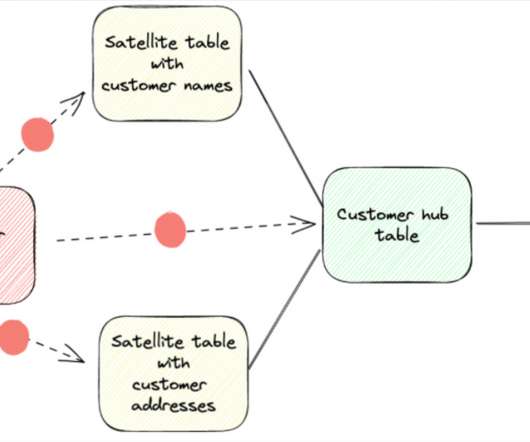

A data vault collects and organizes raw data into an underlying structure that serves as the source for Kimball or Inmon dimensional models. The data vault paradigm addresses the desire to overlay organization on top of semi-permanent raw data storage. Presentation Layer: the reporting layer for the vast majority of users.
