Apache Spark MLlib vs Scikit-learn: Building Machine Learning Pipelines

Towards Data Science

MARCH 9, 2023

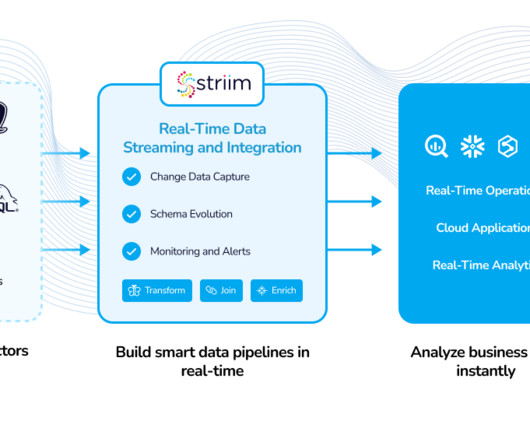

Code implementations for ML pipelines: from raw data to predictions

Photo by Rodion Kutsaiev on Unsplash

Real-life machine learning involves a series of tasks to prepare the data before the magic predictions take place.

And that’s it. Time to meet MLlib.
