Containerizing Apache Hadoop Infrastructure at Uber

Uber Engineering

JULY 22, 2021

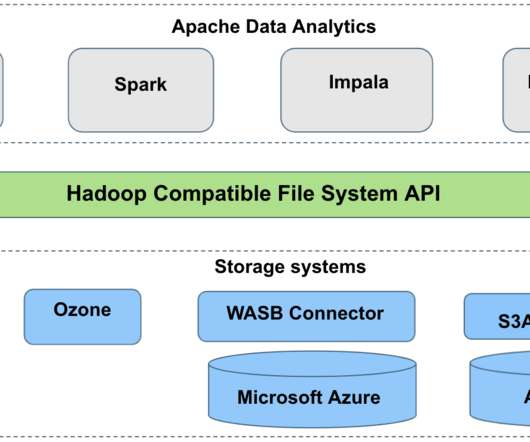

As Uber’s business grew, we scaled our Apache Hadoop (referred to as ‘Hadoop’ in this article) deployment to more than 21,000 hosts over five years to support a variety of analytical and machine learning use cases.
