What Are the Best Data Modeling Methodologies & Processes for My Data Lake?

phData: Data Engineering

SEPTEMBER 19, 2023

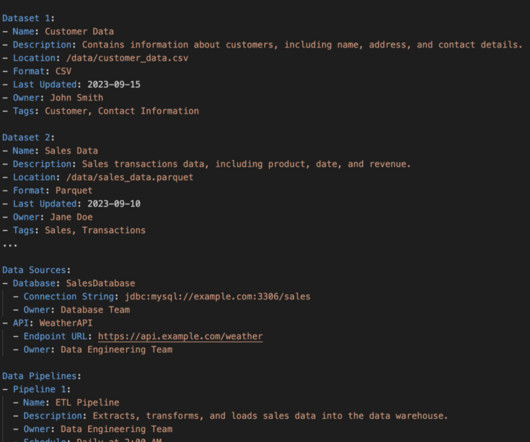

By employing robust data modeling techniques, businesses can unlock the true value of their data lake and transform it into a strategic asset. With many data modeling methodologies and processes available, choosing the right approach can be daunting.
