What Are the Best Data Modeling Methodologies & Processes for My Data Lake?

phData: Data Engineering

SEPTEMBER 19, 2023

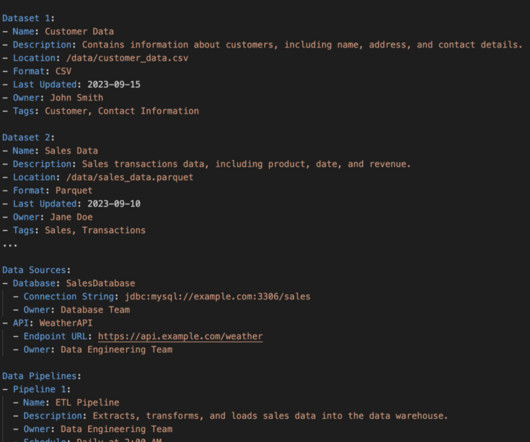

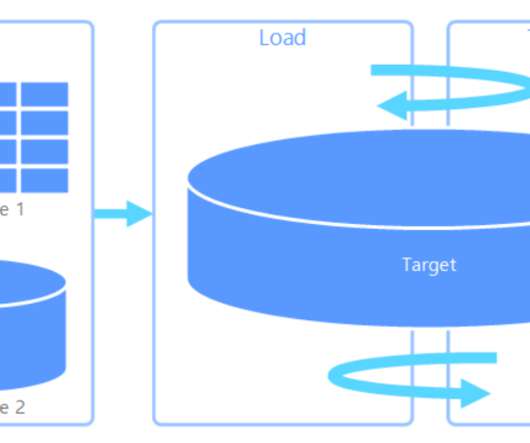

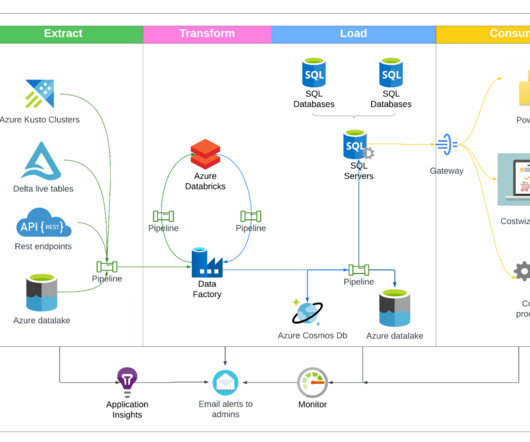

As the amount of data companies use grows to unprecedented levels, organizations are grappling with the challenge of efficiently managing and deriving insights from vast volumes of structured and unstructured data.

What is a Data Lake?

Consistency of data throughout the data lake.
