Druid Deprecation and ClickHouse Adoption at Lyft

Lyft Engineering

NOVEMBER 29, 2023

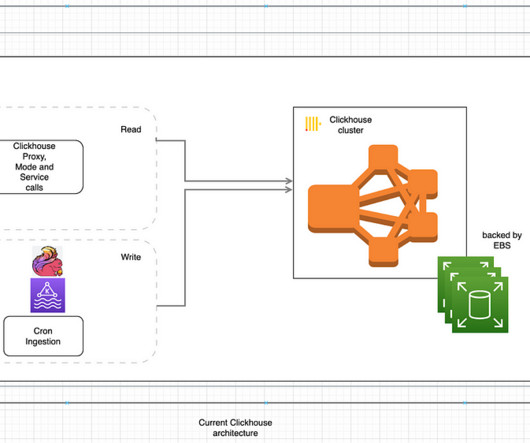

Sub-second query systems enable near real-time data exploration and low-latency, high-throughput queries, and they are particularly well suited to time-series data. In this post, we explain how Druid has been used at Lyft and what led us to adopt ClickHouse as our sub-second analytics system.
