Data News: 2-year anniversary

Christophe Blefari

MAY 19, 2023

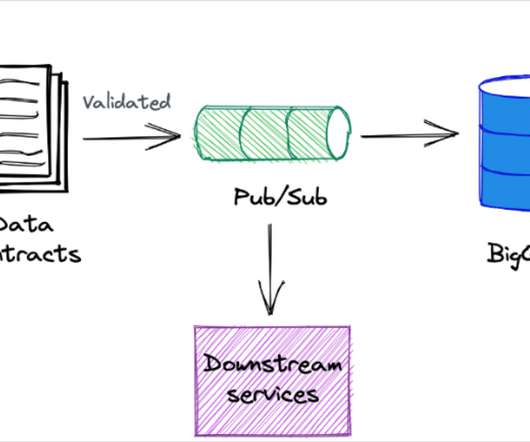

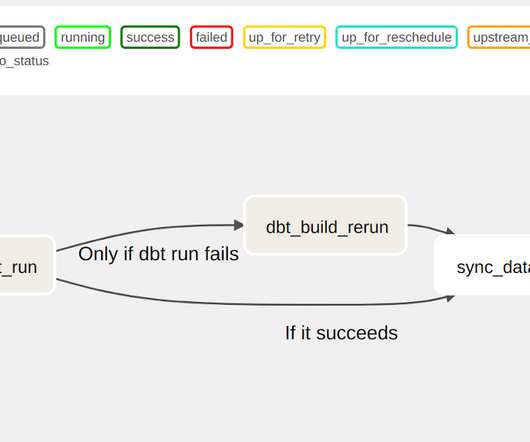

One day, I decided to save the links on a blog created for the occasion; a few days later, 3 people subscribed.

During my time at Kapten, we built a data stack with Airflow, BigQuery, and Metabase + Tableau. I was coming from the Hadoop world, and BigQuery was a breath of fresh air.
