How Snowflake Enhanced GTM Efficiency with Data Sharing and Outreach Customer Engagement Data

Snowflake

APRIL 9, 2024

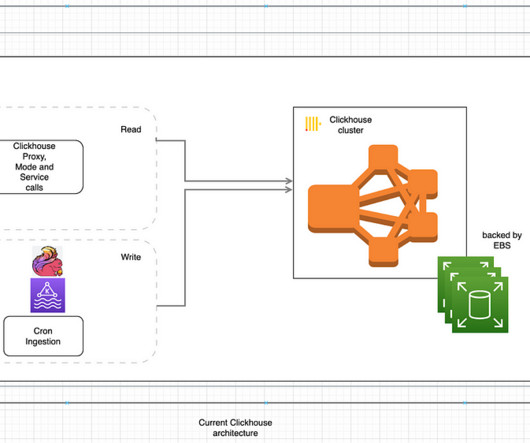

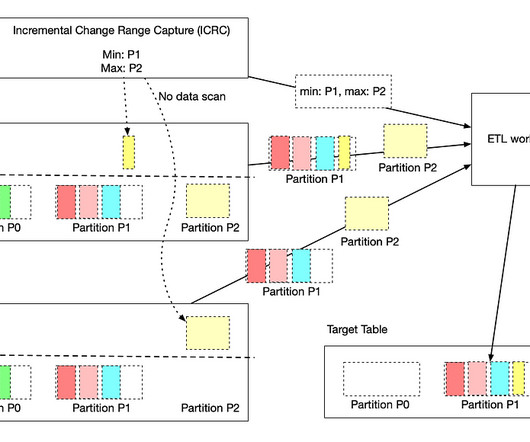

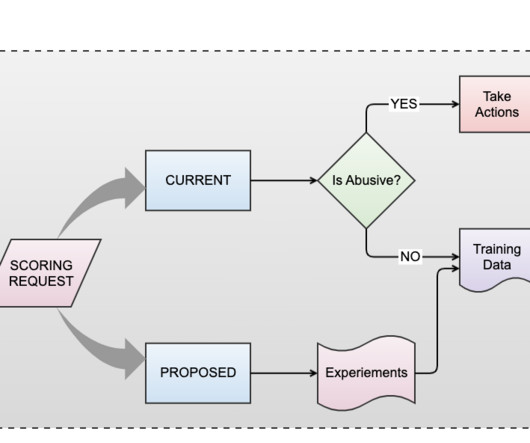

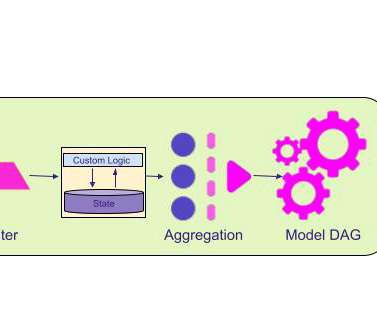

However, that data must be ingested into our Snowflake instance before it can be used to measure engagement or help SDR managers coach their reps, and the existing ingestion process had pain points around data transformation and API calls. Each of these sources may store data differently.
