How to Design a Modern, Robust Data Ingestion Architecture

Monte Carlo

MAY 28, 2024

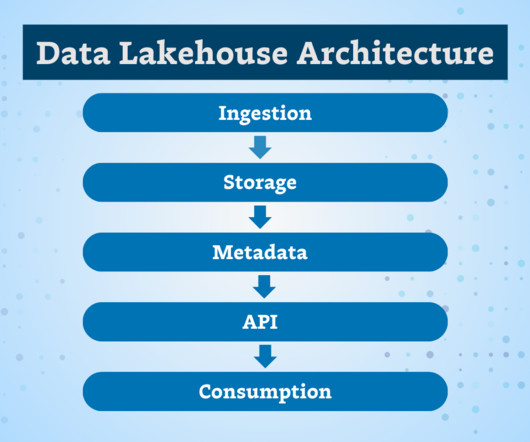

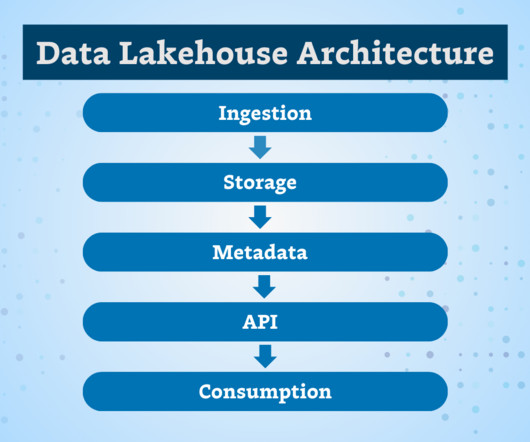

Data Storage: Store validated data in a structured format so that it is easy to access for analysis.

Data Extraction with Apache Hadoop and Apache Sqoop: Hadoop’s distributed file system (HDFS) stores large volumes of data, while Sqoop transfers data between Hadoop and relational databases.
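A minimal sketch of the extract, validate and store flow is shown below. It uses only Python's standard-library `sqlite3` module. One in-memory database stands in for the relational source, which is the role Sqoop reads from when moving tables out of an RDBMS. A second in-memory database stands in for the structured analytical store. The `orders` table, its columns, and the rule in `is_valid` are hypothetical illustrations. A production pipeline would use Sqoop, HDFS or a warehouse rather than SQLite.

```python
import sqlite3

# Hypothetical rows for an "orders" table in the source system.
SOURCE_ROWS = [
    (1, "acme", 120.0),
    (2, None, 35.5),       # missing customer -> rejected
    (3, "globex", -10.0),  # negative amount -> rejected
    (4, "initech", 80.25),
]


def make_source() -> sqlite3.Connection:
    """Build an in-memory database standing in for the relational source."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", SOURCE_ROWS)
    conn.commit()
    return conn


def is_valid(row: tuple) -> bool:
    """Hypothetical rule: a customer is present and the amount is non-negative."""
    _order_id, customer, amount = row
    return customer is not None and amount is not None and amount >= 0


def load_validated(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy valid rows into a typed table in the target; return the rejected count."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS validated_orders "
        "(id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    rows = source.execute("SELECT id, customer, amount FROM orders").fetchall()
    valid = [r for r in rows if is_valid(r)]
    target.executemany("INSERT INTO validated_orders VALUES (?, ?, ?)", valid)
    target.commit()
    return len(rows) - len(valid)


if __name__ == "__main__":
    src = make_source()
    dst = sqlite3.connect(":memory:")
    rejected = load_validated(src, dst)
    # Once data sits in a typed table, analysis is a plain SQL query.
    total = dst.execute("SELECT SUM(amount) FROM validated_orders").fetchone()[0]
    print(f"rejected={rejected} total={total}")  # prints: rejected=2 total=200.25
```

The target table declares `NOT NULL` columns and a primary key. As a result, the storage layer itself enforces part of the schema, and validation does not rest only on the application code.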
