Data Pipeline- Definition, Architecture, Examples, and Use Cases

ProjectPro

DECEMBER 7, 2021

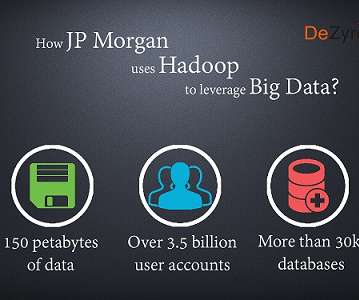

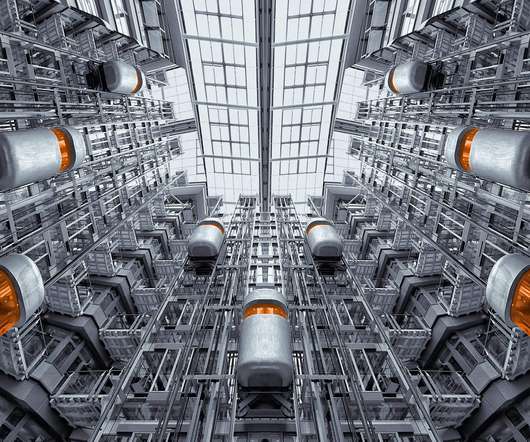

In broader terms, two types of data -- structured and unstructured data -- flow through a data pipeline. The structured data comprises data that can be saved and retrieved in a fixed format, like email addresses, locations, or phone numbers. What is a Big Data Pipeline?

Let's personalize your content