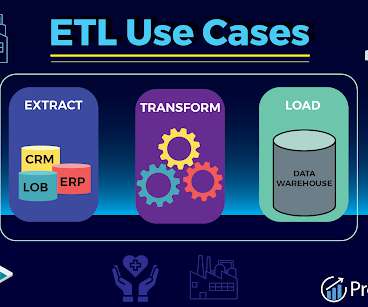

A Data Mesh Implementation: Expediting Value Extraction from ERP/CRM Systems

Towards Data Science

FEBRUARY 6, 2024

ERP and CRM systems are designed and built to fulfil a broad range of business processes and functions. This generalisation makes their data models complex and cryptic, so working with them requires domain expertise. As you do not want to start your development with uncertainty, you decide to go for the operational raw data directly.
