Implementing Data Contracts in the Data Warehouse

Monte Carlo

JANUARY 25, 2023

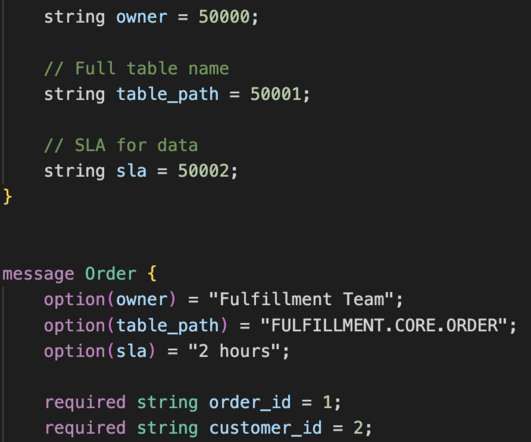

Batch in the warehouse

Data warehouses tend to operate in a batch environment rather than with the stream processing we use when moving data from production services. In streaming, we typically process records individually. Here is an example of a simple Orders table contract defined using protobuf.
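To make the difference between record-level and batch processing concrete, here is a minimal Python sketch. It is not the protobuf contract referred to above. The field names (`order_id`, `customer_id`, `amount_cents`) and the helpers `validate_record` and `validate_batch` are assumptions made up for illustration. The first function checks a single record, as a streaming consumer might. The second checks a whole load at once and summarizes the violations, which is closer to how a warehouse job would work.

```python
from typing import Any, Dict, List

# Hypothetical contract for an Orders table: field name -> required Python type.
# These fields are illustrative assumptions, not the article's actual schema.
ORDERS_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "customer_id": str,
    "amount_cents": int,
}


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Streaming style: check one record against the contract."""
    errors = []
    for field, expected_type in ORDERS_CONTRACT.items():
        if field not in record or record[field] is None:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for {field}")
    return errors


def validate_batch(records: List[Dict[str, Any]]) -> Dict[str, int]:
    """Batch style: check a whole load and count each kind of violation."""
    summary: Dict[str, int] = {}
    for record in records:
        for error in validate_record(record):
            summary[error] = summary.get(error, 0) + 1
    return summary
```

In a streaming pipeline a failing record could be rejected or routed aside on its own. In a batch setting the summary lets the job decide whether the load as a whole is acceptable.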
