Top 8 Interview Questions on Apache Sqoop

Analytics Vidhya

FEBRUARY 1, 2023

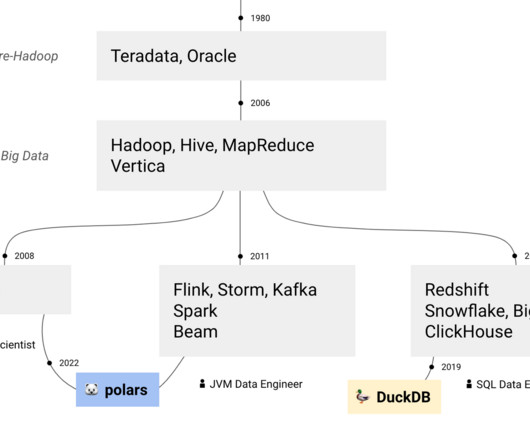

Introduction

As big data keeps growing, organizations need a reliable tool to import and export data between relational databases (RDBMS) and Hadoop. Apache Sqoop, whose name stands for “SQL to Hadoop,” is one such tool: it transfers data between relational databases and the Hadoop ecosystem (HDFS, Hive, HBase, etc.).
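The two directions of transfer can be made concrete with a minimal Python sketch. It only assembles `sqoop import` (RDBMS to HDFS) and `sqoop export` (HDFS to RDBMS) command lines and does not run them. The flags it uses (`--connect`, `--username`, `-P`, `--table`, `--target-dir`, `--export-dir`, `--num-mappers`) are standard Sqoop 1 options. The helper function names, JDBC URL, user name, table names, and HDFS paths are hypothetical placeholders for illustration.

```python
import shlex
from typing import List


def sqoop_import_cmd(jdbc_url: str, username: str, table: str,
                     target_dir: str, mappers: int = 1) -> List[str]:
    """Hypothetical helper: argv for `sqoop import` (RDBMS table -> HDFS dir)."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,          # JDBC URL of the source database
        "--username", username,
        "-P",                           # prompt for the password at run time
        "--table", table,               # source table in the RDBMS
        "--target-dir", target_dir,     # destination directory in HDFS
        "--num-mappers", str(mappers),  # number of parallel map tasks
    ]


def sqoop_export_cmd(jdbc_url: str, username: str, table: str,
                     export_dir: str, mappers: int = 1) -> List[str]:
    """Hypothetical helper: argv for `sqoop export` (HDFS dir -> RDBMS table)."""
    return [
        "sqoop", "export",
        "--connect", jdbc_url,
        "--username", username,
        "-P",
        "--table", table,               # destination table (must already exist)
        "--export-dir", export_dir,     # source directory in HDFS
        "--num-mappers", str(mappers),
    ]


if __name__ == "__main__":
    # Hypothetical connection details, for illustration only.
    url = "jdbc:mysql://db.example.com/sales"
    print(shlex.join(sqoop_import_cmd(url, "analyst", "orders",
                                      "/user/analyst/orders")))
    print(shlex.join(sqoop_export_cmd(url, "analyst", "order_summary",
                                      "/user/analyst/summary")))
```

Building the command as an argument list rather than a single string keeps each value intact if you later pass it to `subprocess.run` on a machine where Sqoop is installed.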
