What is Data Extraction? Examples, Tools & Techniques

Knowledge Hut

JANUARY 30, 2024

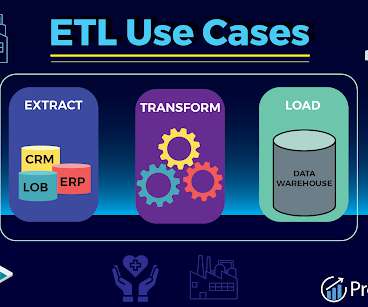

Database Queries: When dealing with structured data stored in databases, SQL queries are instrumental for data extraction.

ETL (Extract, Transform, Load) Processes: ETL tools are designed for the extraction, transformation, and loading of data from one location to another.
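To make the two techniques concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is an illustration under stated assumptions, not a production pipeline: the `orders` and `orders_clean` tables, their columns, and the sample rows are all hypothetical, and both databases live in memory so the example runs on its own.

```python
import sqlite3


def extract(conn):
    """Extract: pull rows from the (hypothetical) source table with a SQL query."""
    cursor = conn.execute(
        "SELECT id, customer, amount_cents FROM orders WHERE amount_cents > ?",
        (0,),  # parameterized filter: skip zero-value orders
    )
    return cursor.fetchall()


def transform(rows):
    """Transform: tidy customer names and convert cents to currency units."""
    return [
        (order_id, customer.strip().title(), amount_cents / 100)
        for order_id, customer, amount_cents in rows
    ]


def load(conn, rows):
    """Load: write the cleaned rows into the (hypothetical) target table."""
    conn.executemany(
        "INSERT INTO orders_clean (id, customer, amount) VALUES (?, ?, ?)", rows
    )
    conn.commit()


def run_pipeline():
    """Build sample source data, run extract -> transform -> load, return the result."""
    # Source database with made-up sample data (assumption for illustration).
    source = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    source.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, " alice ", 1250), (2, "BOB", 0), (3, "carol", 450)],
    )

    # Separate target database, standing in for "another location".
    target = sqlite3.connect(":memory:")
    target.execute(
        "CREATE TABLE orders_clean (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )

    load(target, transform(extract(source)))
    return target.execute(
        "SELECT id, customer, amount FROM orders_clean ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    for row in run_pipeline():
        print(row)
```

The `extract` function corresponds to the database-query technique, while the three functions chained together in `run_pipeline` mirror the extract, transform, and load stages that dedicated ETL tools automate at larger scale.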
