Sqoop vs. Flume: Battle of the Hadoop ETL Tools

ProjectPro

OCTOBER 28, 2015

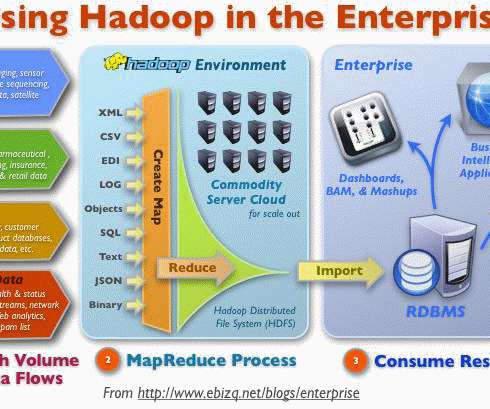

Apache Sqoop and Apache Flume are two popular open-source ETL tools for Hadoop that help organizations overcome the challenges of data ingestion.
