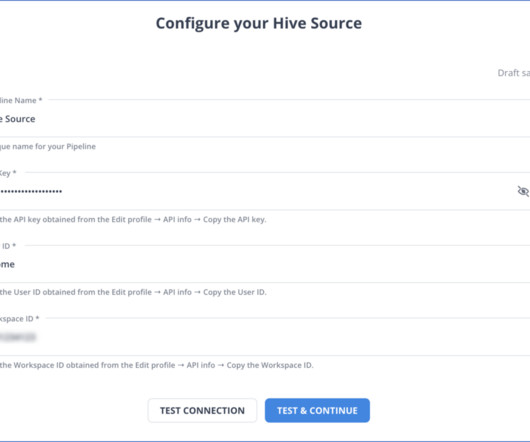

Hive MySQL Replication: 2 Simple and Easy Methods

Hevo

APRIL 4, 2024

Efficient workflow management and secure storage are essential to the success of any project or organization. If you keep large datasets in a cloud-based project management platform like Hive, you can migrate them to a relational database management system (RDBMS) such as MySQL.
